Annotation Cross-Labeling For Autonomous Control Systems

Patent No. US11361457 (titled "Annotation Cross-Labeling For Autonomous Control Systems") was granted on an application filed by Tesla Inc on Jul 17, 2019.

What is this patent about?

’457 is related to the field of autonomous vehicle systems and, more specifically, to the training of computer models used in these systems. Autonomous vehicles rely on sensors like cameras and LIDAR to perceive their surroundings. Training the computer models that interpret this sensor data requires accurately labeled data, a process known as annotation. However, annotating 3D data from sensors like LIDAR is often more difficult and computationally expensive than annotating 2D images from cameras.

The underlying idea behind ’457 is to leverage the relative ease of annotating 2D camera images to assist in the annotation of 3D LIDAR data. The system uses an existing annotation (e.g., a bounding box) in a 2D image to define a 3D spatial region of interest in the corresponding LIDAR point cloud. This region is then searched to find the corresponding object in the 3D data, effectively reducing the search space and computational burden.

The claims of ’457 focus on a method, system, and storage medium for annotating 3D sensor data using 2D image annotations. Specifically, the claims cover obtaining a 2D image and a 3D point cloud of the same scene, identifying an annotation (bounding box) in the 2D image, determining a frustum-shaped spatial region in the 3D space based on the 2D annotation and camera viewpoint, and then annotating the 3D point cloud within that frustum to locate the corresponding object.

In practice, the system first identifies an object of interest in a camera image, perhaps a pedestrian or another vehicle. Because the camera's position and orientation relative to the LIDAR sensor are known, the system can project the 2D bounding box from the image into the 3D space of the LIDAR data. This projection creates a viewing frustum, a truncated pyramid that represents the volume of space the object is likely to occupy. By limiting the search for the object in the LIDAR data to this frustum, the system significantly reduces the computational resources needed for annotation.
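The frustum test described above can be sketched in a few lines of Python. This is a minimal illustration rather than the patented implementation: it assumes a simple pinhole camera model, assumes the LIDAR points have already been transformed into the camera frame using the known sensor calibration, and uses hypothetical names (`points_in_frustum`, `fx`, `fy`, `cx`, `cy`, `near`, `far`) that do not come from the patent. A point lies inside the frustum exactly when its projection onto the image falls inside the 2D bounding box and its depth lies between the near and far limits.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]           # (x, y, z) in the camera frame, z pointing forward
Box2D = Tuple[float, float, float, float]    # (u_min, v_min, u_max, v_max) in pixels


def points_in_frustum(points: List[Point], box: Box2D,
                      fx: float, fy: float, cx: float, cy: float,
                      near: float = 0.5, far: float = 100.0) -> List[Point]:
    """Keep only the points whose image projection lands inside the 2D box.

    Assumption: pinhole intrinsics (fx, fy, cx, cy) and points already
    expressed in the camera frame. near/far truncate the pyramid.
    """
    u_min, v_min, u_max, v_max = box
    selected = []
    for x, y, z in points:
        if not (near <= z <= far):
            continue  # behind the camera or outside the truncated depth range
        u = fx * x / z + cx  # horizontal pixel coordinate
        v = fy * y / z + cy  # vertical pixel coordinate
        if u_min <= u <= u_max and v_min <= v <= v_max:
            selected.append((x, y, z))
    return selected


# Hypothetical toy data: four points and one 2D annotation.
points = [
    (0.0, 0.0, 10.0),    # straight ahead, projects to the image center
    (5.0, 0.0, 10.0),    # far to the right of the box
    (0.2, -0.1, 20.0),   # near the optical axis, farther away
    (0.0, 0.0, -5.0),    # behind the camera
]
box = (600.0, 320.0, 680.0, 400.0)
inside = points_in_frustum(points, box, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(inside)
```

Only the first and third points survive the filter, so any later 3D annotation step would operate on that subset instead of the full point cloud.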

This approach differs from prior methods that either require manual annotation of the 3D data or apply annotation models to the entire 3D point cloud. By using the 2D image annotation to constrain the search space, ’457 improves the accuracy and efficiency of the 3D annotation process. This is particularly beneficial for training computer models used in autonomous vehicles, where large amounts of accurately labeled data are essential for reliable performance. The constrained search avoids the problem of annotation models assigning high likelihoods to incorrect regions outside the actual location of the object.
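The effect of the constrained search on false positives can be illustrated with a small, hypothetical example. The candidate list, the scores, and the helper `center_in_frustum` are all assumptions made for illustration; the patent summary does not specify how an annotation model scores candidate 3D boxes. The sketch only shows that restricting the candidates to the frustum can change which one is selected.

```python
def center_in_frustum(center, box, fx, fy, cx, cy):
    """Return True if a 3D center (camera frame) projects inside the 2D box.

    Assumption: pinhole projection with intrinsics fx, fy, cx, cy.
    """
    x, y, z = center
    if z <= 0:
        return False  # behind the camera
    u = fx * x / z + cx
    v = fy * y / z + cy
    u_min, v_min, u_max, v_max = box
    return u_min <= u <= u_max and v_min <= v <= v_max


# Hypothetical candidate 3D detections with model scores.
candidates = [
    {"center": (8.0, 0.0, 15.0), "score": 0.91},  # strong response, but off to the side
    {"center": (0.1, 0.0, 12.0), "score": 0.84},  # lies along the annotated viewing direction
    {"center": (0.0, 0.1, 30.0), "score": 0.40},
]
box = (600.0, 320.0, 680.0, 400.0)
intrinsics = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)

# Searching the whole point cloud picks the highest score anywhere.
unconstrained = max(candidates, key=lambda c: c["score"])

# Searching only inside the frustum discards candidates outside the region first.
in_region = [c for c in candidates if center_in_frustum(c["center"], box, **intrinsics)]
constrained = max(in_region, key=lambda c: c["score"])

print(unconstrained["score"], constrained["score"])
```

In this toy setup the unconstrained search selects the 0.91 candidate, which lies outside the region implied by the 2D annotation, while the constrained search selects the 0.84 candidate inside it.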

How does this patent fit in the bigger picture?

Technical landscape at the time

In the late 2010s, when ’457 was filed, autonomous systems commonly relied on sensor data such as camera images and LIDAR point clouds. Training data was typically annotated by human operators or by annotation models, and hardware and software constraints made annotating 3D LIDAR data non-trivial because of the format, size, and complexity of that data.

Novelty and Inventive Step

The examiner allowed the claims because the prior art fails to teach or suggest using a spatial region representing a frustum extending from a first sensor's viewpoint through the boundaries of a first bounding box. The prior art also does not teach annotating sensor measurements within this spatial region to generate a second annotation identifying a second bounding box associated with a characteristic object in 3D space, where the second bounding box is identified by searching within a subset of the 3D space.

Claims

This patent contains 20 claims, with independent claims 1, 11, and 19. The independent claims are directed to a method, a non-transitory computer-readable storage medium, and a system, respectively, all generally focused on annotating sensor measurements using image data to identify objects in a 3D space. The dependent claims generally elaborate on and refine the elements and steps recited in the independent claims.

Key Claim Terms New

Definitions of key terms used in the patent claims.

Litigation Cases New

US Latest litigation cases involving this patent.

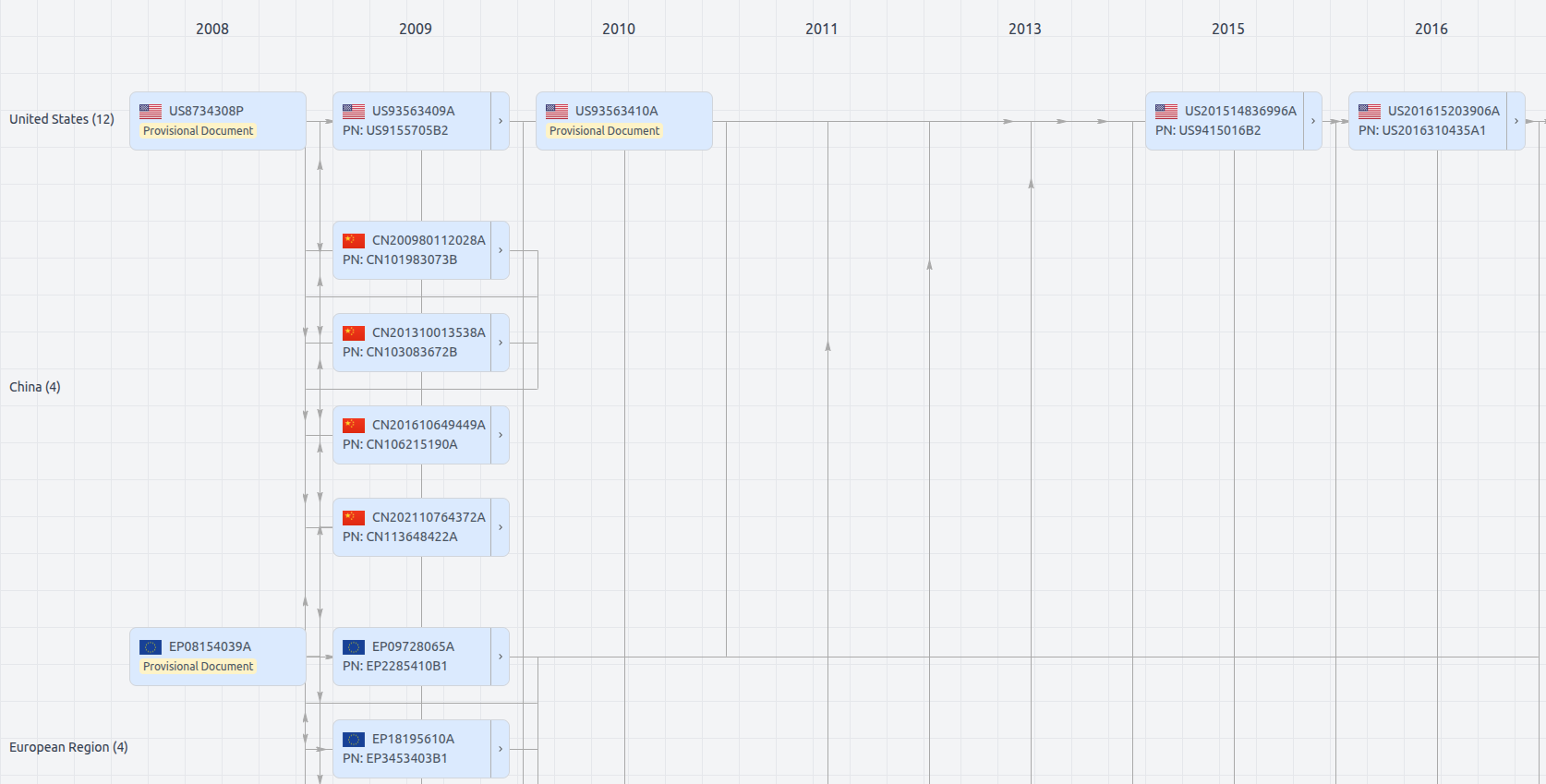

US11361457

- Application Number: US16514721
- Filing Date: Jul 17, 2019
- Status: Granted
- Expiry Date: Sep 27, 2039
