Optimizing Neural Network Structures For Embedded Systems

Patent No. US11636333 (titled "Optimizing Neural Network Structures For Embedded Systems") was filed by Tesla Inc on Jul 25, 2019.

What is this patent about?

’333 is related to the field of autonomous control systems, specifically the generation and deployment of machine-learned models for embedded systems within vehicles. These systems analyze the surrounding environment to guide vehicles safely, often using computer models trained on large datasets. However, training these models is computationally expensive and time-consuming, especially when exploring different model architectures for various target platforms.

The underlying idea behind ’333 is to create a model training pipeline that efficiently selects and trains machine learning models tailored to the specific capabilities of individual embedded systems. This is achieved by generating an intermediate representation of the model, evaluating its performance *before* full training, and iteratively refining the model architecture based on these pre-training performance estimates. This approach avoids wasting resources on training models that are unsuitable for the target platform.

The claims of ’333 focus on a method, system, and non-transitory computer readable media for generating a machine-learned model. The process involves generating an untrained model, creating an intermediate representation of it compatible with a virtual machine, and evaluating its performance in a target system. This evaluation includes determining latency, frequency of application, resource usage, and power consumption. The process iteratively generates and evaluates new untrained models, selects a subset based on performance, trains them, evaluates their accuracies, and selects a final model for deployment.

The invention works by first generating a model and compiling it into an intermediate representation that is independent of the target platform's specific hardware. This representation is then fed into a virtual machine, which translates it into machine code optimized for the target processor (CPU, GPU, or DSP). A performance evaluator estimates the model's performance based on both the intermediate representation and the generated machine code, considering factors like memory usage, computational complexity, and power consumption. This allows for rapid exploration of different model architectures and configurations without the overhead of full training.

A key differentiation from prior approaches is the emphasis on pre-training performance estimation . Instead of fully training every model candidate, the pipeline uses the intermediate representation and virtual machine to predict performance characteristics *before* training. This allows the system to quickly discard unsuitable models and focus resources on training only the most promising candidates. Furthermore, the use of an intermediate representation enables deployment across a variety of embedded platforms, as long as a suitable virtual machine and kernel set are available for that platform.

How does this patent fit in bigger picture?

Technical landscape at the time

In the late 2010s when ’333 was filed, machine learning models were increasingly being deployed on embedded systems at a time when hardware constraints made efficient model execution non-trivial. At a time when X was typically implemented using Y, model training often occurred on powerful servers, while inference was performed on resource-constrained devices. When systems commonly relied on Z rather than A, the translation of complex models into a format suitable for embedded systems was a significant challenge.

Novelty and Inventive Step

The examiner approved the application because the prior art did not teach or make obvious generating an untrained model, creating an intermediate representation of it compatible with a virtual machine, evaluating the untrained model's performance (including latency, frequency, resource usage, and power consumption), iteratively generating and evaluating new untrained models based on previous models' performance, selecting a subset of models, training them, evaluating their accuracies, and selecting a model for deployment based on those accuracies. The examiner stated that the prior art failed to render obvious iteratively generating and evaluating new untrained models, wherein individual new untrained models are generated based on performance of one or more previous untrained models; select a subset of models based on the performance of the generated models; train the selected subset of models; evaluate respective accuracies of the subset of models; and select a particular model of the subset of models for deployment to the target system based on the accuracies, when the performance of untrained models is determined by evaluation of the performance of the untrained models that includes at least one of determining a latency in applying the untrained model in a target system, determining a frequency at which the untrained model can be applied in the target system, determining an amount of resources used by the untrained model, and determining an amount of power consumed by the target system using the untrained model, and an intermediate representation of the generated untrained model is generated that is compatible with a virtual machine, as required by the amended independent claims.

Claims

This patent contains 24 claims, of which claims 1, 9, and 17 are independent. The independent claims are directed to a method, a system, and computer readable media for generating a machine-learned model. The dependent claims generally elaborate on the specifics of the model generation, evaluation, and selection processes described in the independent claims.

Key Claim Terms New

Definitions of key terms used in the patent claims.

Litigation Cases New

US Latest litigation cases involving this patent.

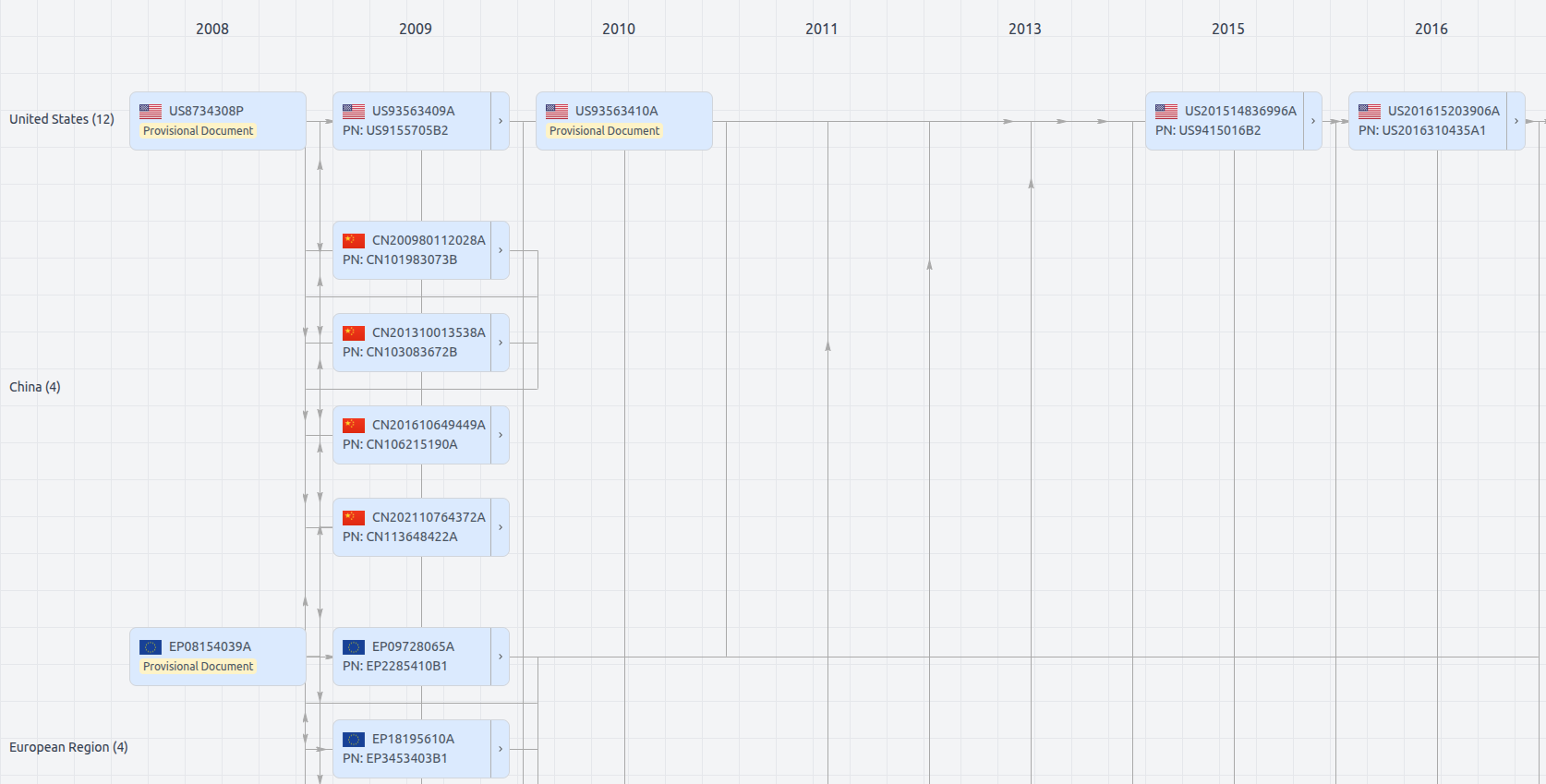

Patent Family

File Wrapper

The dossier documents provide a comprehensive record of the patent's prosecution history - including filings, correspondence, and decisions made by patent offices - and are crucial for understanding the patent's legal journey and any challenges it may have faced during examination.

Date

Description

Get instant alerts for new documents

US11636333

- Application Number

- US16522411

- Filing Date

- Jul 25, 2019

- Status

- Granted

- Expiry Date

- Nov 26, 2041

- External Links

- Slate, USPTO, Google Patents