Data Pipeline And Deep Learning System For Autonomous Driving

Patent No. US11734562 (titled "Data Pipeline And Deep Learning System For Autonomous Driving") was filed by Tesla Inc on Dec 16, 2021.

What is this patent about?

’562 is related to the field of autonomous vehicle control systems, specifically those employing deep learning. Modern self-driving systems rely on sensor data, such as camera images, to perceive the environment. Traditional approaches feed the entire image into the first layer of a neural network. However, this can be inefficient because different components of the image signal carry different types of information that are best processed at different stages of the network. The patent addresses the problem of efficiently processing sensor data in deep learning systems for autonomous driving.

The underlying idea behind ’562 is to decompose a captured image into different component images based on their signal characteristics and feed these components into different layers of a convolutional neural network. Instead of feeding the entire image into the first layer, the image is split into a feature data component (containing edge information) and a global data component (containing global illumination data). The feature data is fed into the initial layer, while the global data is fed into a later layer, along with the intermediate result from a prior layer.

The claims of ’562 focus on a method, computer program product, and system for processing images in an autonomous vehicle. The independent claims cover receiving an image, extracting a global data component and a feature data component, providing these components as input to different layers of a convolutional neural network, and obtaining a vehicle control result based on the network's output. Specifically, the feature data component is input to the first layer, and the global data component, along with the output from a prior layer, is input to a subsequent layer.
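The claimed data flow can be sketched in plain NumPy. This is a minimal illustration under stated assumptions, not Tesla's implementation. In it, 2x2 block averaging stands in for the low-pass filter, and the feature component is the residual left after subtracting the up-sampled global component. The layer widths, the pooling step, and the random weights are arbitrary. The single steering value returned by the hypothetical `forward` function is only a stand-in for the claimed vehicle control result. The sketch demonstrates the wiring alone: the first layer sees only the feature component, and the second layer receives the first layer's intermediate result together with the global component.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def upsample2(x):
    """Nearest-neighbour 2x up-sampling of the last two axes."""
    return np.repeat(np.repeat(x, 2, axis=-2), 2, axis=-1)

def decompose(img):
    """Split an (H, W) image into a feature (edge) part and a coarse global part.
    Assumption: 2x2 block averaging stands in for the low-pass filter."""
    h, w = img.shape
    global_part = img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))  # (H/2, W/2)
    feature_part = img - upsample2(global_part)                         # (H, W) high-pass residual
    return feature_part, global_part

def conv2d(x, w, b):
    """Zero-padded 'same' 2D cross-correlation. x: (C_in, H, W), w: (C_out, C_in, k, k)."""
    k = w.shape[-1]
    xp = np.pad(x, ((0, 0), (k // 2, k // 2), (k // 2, k // 2)))
    windows = sliding_window_view(xp, (k, k), axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", windows, w) + b[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

def avg_pool2(x):
    """2x2 average pooling over the spatial axes of a (C, H, W) array."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def forward(img, p):
    feature, global_part = decompose(img)
    # First layer: receives only the feature (edge) component.
    h1 = avg_pool2(relu(conv2d(feature[None], p["w1"], p["b1"])))  # (8, H/2, W/2)
    # Second layer: receives the first layer's intermediate result plus the global component.
    x2 = np.concatenate([h1, global_part[None]], axis=0)           # (9, H/2, W/2)
    h2 = relu(conv2d(x2, p["w2"], p["b2"]))                        # (8, H/2, W/2)
    # Hypothetical control head: a single steering value in (-1, 1).
    pooled = h2.mean(axis=(1, 2))                                  # (8,)
    return float(np.tanh(p["w_head"] @ pooled + p["b_head"]))

rng = np.random.default_rng(0)
params = {
    "w1": rng.normal(0, 0.1, (8, 1, 3, 3)), "b1": np.zeros(8),
    "w2": rng.normal(0, 0.1, (8, 9, 3, 3)), "b2": np.zeros(8),
    "w_head": rng.normal(0, 0.1, 8), "b_head": 0.0,
}
image = rng.random((32, 32))  # stand-in for a camera frame
steering = forward(image, params)
print(f"steering command: {steering:+.3f}")
```

Concatenating the global component as an extra input channel is one plausible reading of "providing it as input" to the second layer. Adding it to the feature maps or routing it through its own branch would fit the description equally well.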

In practice, the system captures an image using a camera on the vehicle. This image is then processed to extract the feature and global data components. A high-pass filter can be used to extract the feature data, while a low-pass filter can be used to extract the global data. The feature data, highlighting edges and textures, is fed into the first layer of the convolutional neural network, which is designed to detect local features. The global data, representing overall illumination and context, is fed into a later layer, allowing the network to integrate global context with local feature information.
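The filtering step can be sketched as follows. This minimal version assumes a box blur as the low-pass filter and defines the high-pass (feature) component as the residual `image - low_pass(image)`, which is one common construction. The description above does not pin down the exact filters. The names `box_blur` and `split_components` and the synthetic test image are hypothetical.

```python
import numpy as np

def box_blur(img, radius=2):
    """Low-pass filter: mean over a (2r+1) x (2r+1) window, with edge pixels replicated."""
    k = 2 * radius + 1
    kernel = np.ones(k) / k
    padded = np.pad(img, radius, mode="edge")
    # The box filter is separable: blur along rows, then along columns.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def split_components(img, radius=2):
    """Return (feature_data, global_data) for a 2D grayscale image."""
    global_data = box_blur(img, radius)  # low-pass: illumination and context
    feature_data = img - global_data     # high-pass residual: edges and texture
    return feature_data, global_data

# Hypothetical 16x16 test image: a left-to-right illumination gradient plus a step edge at column 8.
h, w = 16, 16
gradient = np.tile(np.linspace(0.0, 0.5, w), (h, 1))
step = np.tile(np.where(np.arange(w) >= 8, 0.3, 0.0), (h, 1))
image = gradient + step

feature_data, global_data = split_components(image)
print(np.round(feature_data[0], 2))  # one row of the feature component
```

The two components sum back to the original image, so the split itself discards no information. In this example, the feature component is close to zero across the smooth gradient and peaks around the step edge at column 8, while the gradient survives in the global component.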

This approach differs from prior methods that feed the entire image into the first layer of the network. By separating the image into components and feeding them into different layers, the system can process the data more efficiently: the initial layers can focus on detecting edges and features, while later layers integrate global context. This can improve accuracy and reduce computational requirements. Because the global data component varies slowly across the image, it can be down-sampled without significant loss of information, which lightens the computational load on the later layers.
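The efficiency argument can be checked on a synthetic frame. This sketch makes several assumptions: an ideal FFT low-pass filter with a cutoff of 1/32 cycles per pixel, 4x nearest-neighbour down-sampling, and 8-channel 3x3 convolutions. The frame, cutoff, and channel counts are illustrative choices, not values from the patent. The sketch compares how much each component loses when it is down-sampled and re-expanded, then counts the multiply-accumulates a later layer would need at each resolution.

```python
import numpy as np

def fft_lowpass(img, cutoff):
    """Ideal low-pass: zero every 2D frequency above `cutoff` cycles/pixel."""
    fy = np.abs(np.fft.fftfreq(img.shape[0]))[:, None]
    fx = np.abs(np.fft.fftfreq(img.shape[1]))[None, :]
    mask = (fy <= cutoff) & (fx <= cutoff)
    return np.real(np.fft.ifft2(np.fft.fft2(img) * mask))

def down_up(x, factor):
    """Keep every `factor`-th pixel, then restore the size by nearest-neighbour repetition."""
    small = x[::factor, ::factor]
    return np.repeat(np.repeat(small, factor, axis=0), factor, axis=1)

def rel_error(approx, ref):
    return np.linalg.norm(approx - ref) / np.linalg.norm(ref)

def conv_macs(h, w, c_in, c_out, k=3):
    """Multiply-accumulates for one k x k 'same' convolution layer."""
    return h * w * c_in * c_out * k * k

# Synthetic 64x64 frame (an assumption): smooth illumination, one vertical edge, fine noise texture.
rng = np.random.default_rng(0)
cols = np.arange(64)
illumination = 0.5 + 0.2 * np.sin(2 * np.pi * cols / 64)
edge = np.where(cols >= 32, 0.3, 0.0)
frame = np.tile(illumination + edge, (64, 1)) + rng.normal(0, 0.05, (64, 64))

global_data = fft_lowpass(frame, cutoff=1 / 32)
feature_data = frame - global_data

factor = 4
err_global = rel_error(down_up(global_data, factor), global_data)
err_feature = rel_error(down_up(feature_data, factor), feature_data)
mac_ratio = conv_macs(64, 64, 8, 8) / conv_macs(64 // factor, 64 // factor, 8, 8)

print(f"relative error, global:  {err_global:.3f}")
print(f"relative error, feature: {err_feature:.3f}")
print(f"MAC reduction for a later layer: {mac_ratio:.0f}x")
```

On this frame the global component survives the round trip almost unchanged, while the feature component does not. A later layer working at 16x16 instead of 64x64 needs 16 times fewer multiply-accumulates. Part of the global component's small error comes from its constant brightness offset, which survives down-sampling exactly, so the precise error figures depend on the frame chosen.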

How does this patent fit in bigger picture?

Technical landscape at the time

In the late 2010s, the period to which ’562 traces its priority (the application itself was filed in 2021), sensor data processing was a significant bottleneck for autonomous driving systems. As deep learning was increasingly used for autonomous driving, systems commonly relied on converting raw sensor data into a format suitable for the initial input layer of a neural network. This conversion often involved compression and down-sampling, which could reduce the fidelity of the original sensor data. Furthermore, adapting to different sensor types required developing new conversion processes.

Novelty and Inventive Step

The examiner allowed the claims because the prior art, taken individually or together, did not teach providing both global illumination data and edge data extracted from an image as input to a convolutional neural network that includes multiple sequential layers. Specifically, the feature data component is provided as input to the first layer, while the global data component and an intermediate result from a prior layer are provided as input to a second layer.

Claims

This patent contains 20 claims, of which claims 1, 11, and 16 are independent. The independent claims are directed to a method, a computer program product, and a system, respectively, all generally relating to using a convolutional neural network with global and feature data components from an image to inform autonomous vehicle operation. The dependent claims generally add limitations or details to the independent claims, further defining the method, computer program product, or system.

Key Claim Terms New

Definitions of key terms used in the patent claims.

Litigation Cases New

US Latest litigation cases involving this patent.

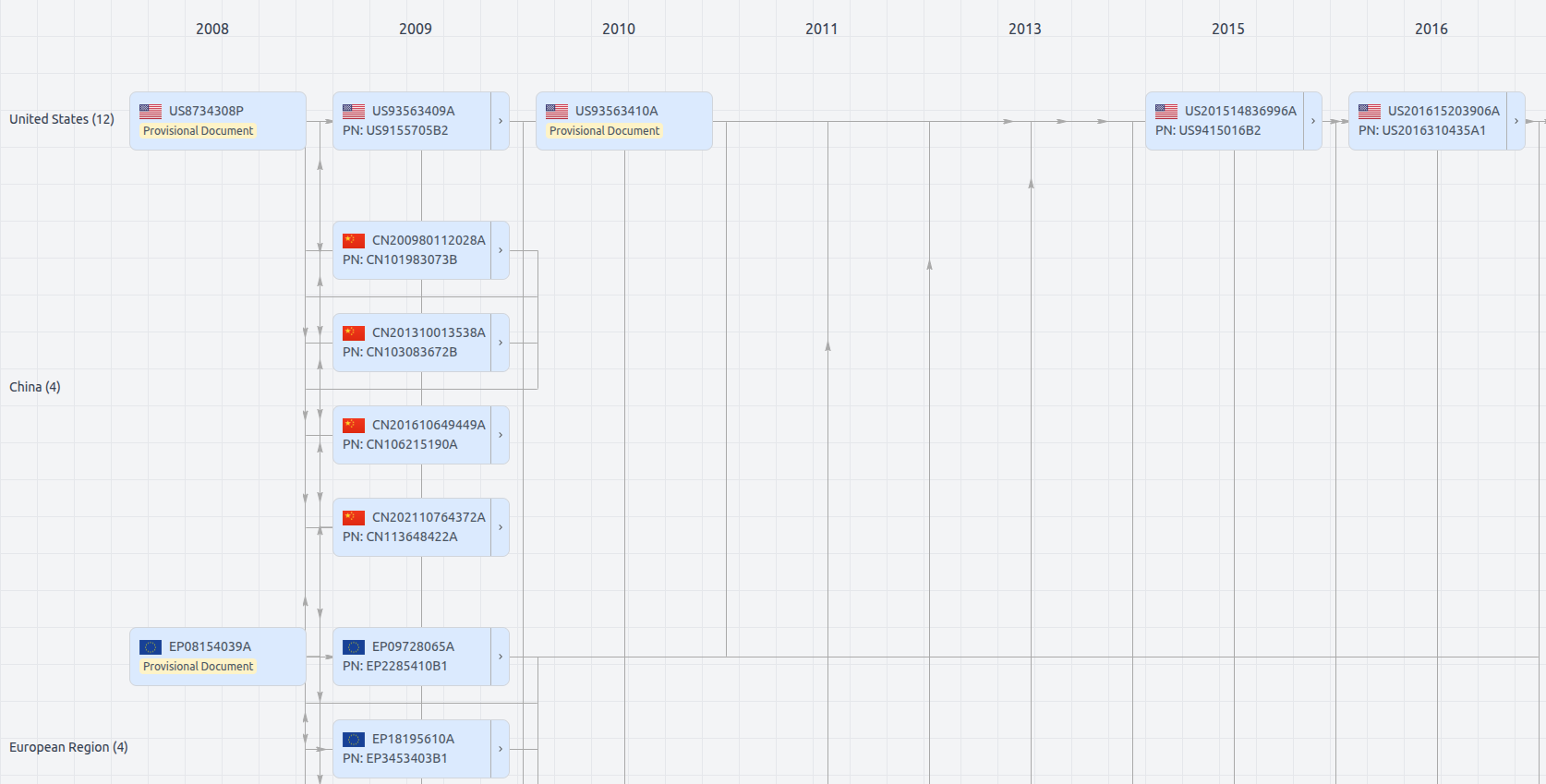


US11734562

- Application Number: US17644748
- Filing Date: Dec 16, 2021
- Status: Granted
- Expiry Date: Aug 6, 2038
- External Links: Slate, USPTO, Google Patents