Music Streaming, Playlist Creation And Streaming Architecture

Patent No. US11899713 (titled "Music Streaming, Playlist Creation And Streaming Architecture") was filed by Muvox Llc on Jan 5, 2023.

What is this patent about?

’713 relates to music streaming services. It addresses how music publishers can provide streamed music to end users in a non-programmatic way while still allowing personalized playlists. Existing services often give publishers no direct way to engage users or make them aware of the source of the music. They also struggle to categorize and recommend music efficiently on the basis of objective criteria.

The underlying idea behind ’713 is to use computer-derived rhythm, texture, and pitch (RTP) scores to categorize music tracks. The scores are derived by extracting low-level data from each track and mapping that data to high-level acoustic attributes. Tracks can then be categorized, and playlists built, from objective musical characteristics rather than subjective human tagging. The system also aims to create a universal database of RTP scores so that different music publishers do not have to analyze the same tracks redundantly.
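
As a minimal sketch of the scoring step, assume that each high-level acoustic attribute has already been normalized to the range 0 to 1. The snippet below averages hypothetical attribute pairs and quantizes each average onto a 1-to-5 scale. The attribute names, the pairing, and the 1-to-5 scale are all illustrative assumptions, not the mapping disclosed in ’713.

```python
from dataclasses import dataclass
from typing import Tuple


# Hypothetical high-level acoustic attributes, each assumed normalized to [0.0, 1.0].
# The names and the way they are combined below are illustrative only.
@dataclass(frozen=True)
class HighLevelAttributes:
    tempo: float
    beat_strength: float
    timbral_density: float
    spectral_flatness: float
    pitch_height: float
    tonal_clarity: float


def to_score(value: float, levels: int = 5) -> int:
    """Quantize a value in [0, 1] onto an integer scale 1..levels (clamping out-of-range input)."""
    clamped = min(max(value, 0.0), 1.0)
    return min(int(clamped * levels) + 1, levels)


def rtp_scores(attrs: HighLevelAttributes) -> Tuple[int, int, int]:
    """Combine assumed attribute pairs into (rhythm, texture, pitch) scores."""
    rhythm = to_score((attrs.tempo + attrs.beat_strength) / 2)
    texture = to_score((attrs.timbral_density + attrs.spectral_flatness) / 2)
    pitch = to_score((attrs.pitch_height + attrs.tonal_clarity) / 2)
    return rhythm, texture, pitch
```

For example, `rtp_scores(HighLevelAttributes(0.9, 0.9, 0.1, 0.1, 0.5, 0.5))` yields a fast, sparse, mid-pitched profile of `(5, 1, 3)`. A coarse integer scale keeps the scores easy to group and compare across catalogs.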

The claims of ’713 focus on selecting a song based on a computer-derived comparison between a representation of the song and known similarities in the representations of other songs. Those known similarities come from a human-trained machine that uses the representations of the other songs. The representation of the song itself is based on isolating and identifying its frequency characteristics. The selection also depends on how closely the moods of the song match the moods of the other songs.
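
Under loose assumptions, the selection step can be sketched as a nearest-neighbor search limited to songs that share the seed song's mood. In this sketch each representation is a plain list of floats, and cosine similarity stands in for the computer-derived comparison. The catalog layout, the mood labels, and the function names are hypothetical. The human-trained machine that would produce the real representations is not modeled.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def select_song(seed_vector: Sequence[float], seed_mood: str,
                catalog: Dict[str, Tuple[List[float], str]],
                exclude: Optional[str] = None) -> Optional[str]:
    """Return the most similar catalog song among songs with the seed's mood.

    catalog maps a hypothetical song ID to (representation vector, mood label).
    Returns None when no song with a matching mood is available.
    """
    best_id: Optional[str] = None
    best_score = -math.inf
    for song_id, (vector, mood) in catalog.items():
        if song_id == exclude or mood != seed_mood:
            continue  # only songs sharing the seed's mood are eligible
        score = cosine_similarity(seed_vector, vector)
        if score > best_score:
            best_id, best_score = song_id, score
    return best_id
```

Because the mood filter runs before the similarity ranking, a song with an acoustically identical vector but a different mood label is never chosen. Mood therefore acts as a hard constraint rather than one more weighted feature.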

In practice, the system involves extracting low-level data from music tracks, analyzing this data to develop high-level acoustic attributes, and then using these attributes to generate RTP scores. These scores are then used to categorize the tracks and create collections that can be accessed by end-user applications. A key aspect is the use of a universal database where RTP scores are stored, allowing different music publishers to share this information and avoid redundant analysis. This database is accessed through an API server, ensuring controlled access and data integrity.
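
The analyze-once idea can be shown with a minimal in-memory sketch. `UniversalRtpDatabase` is a hypothetical stand-in for the shared database behind the API server. A caller must present an authorized key, and a track is analyzed only the first time any publisher requests it. The `analyze` callback, the key check, and the grouping of tracks into collections by identical (rhythm, texture, pitch) tuples are illustrative assumptions, not the patent's architecture.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Set, Tuple

RTP = Tuple[int, int, int]  # (rhythm, texture, pitch) scores


class UniversalRtpDatabase:
    """Hypothetical shared store of RTP scores, fronted by an API-style key check."""

    def __init__(self, analyze: Callable[[str], RTP], authorized_keys: Set[str]):
        self._analyze = analyze                  # expensive audio analysis (assumed)
        self._authorized = set(authorized_keys)  # keys issued to publishers (assumed)
        self._scores: Dict[str, RTP] = {}
        self.analysis_count = 0                  # tracks actually analyzed so far

    def get_scores(self, api_key: str, track_id: str) -> RTP:
        # Controlled access: reject callers without a known key.
        if api_key not in self._authorized:
            raise PermissionError("unknown API key")
        # Analyze each track once; later requests from any publisher reuse the result.
        if track_id not in self._scores:
            self._scores[track_id] = self._analyze(track_id)
            self.analysis_count += 1
        return self._scores[track_id]


def build_collections(db: UniversalRtpDatabase, api_key: str,
                      track_ids: Iterable[str]) -> Dict[RTP, List[str]]:
    """Group tracks that share the same RTP tuple into one collection."""
    collections: Dict[RTP, List[str]] = defaultdict(list)
    for track_id in track_ids:
        collections[db.get_scores(api_key, track_id)].append(track_id)
    return dict(collections)
```

In this sketch, if a second publisher builds collections from tracks that the first publisher already requested, `analysis_count` does not increase. That is the redundancy the universal database is meant to remove.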

The system differentiates itself from prior approaches by using objective, computer-derived RTP scores for music categorization, rather than relying solely on human tagging or genre classifications. The human-trained machine aspect is also important, as it allows the system to learn and improve its categorization accuracy over time. Furthermore, the universal database and API server architecture enables efficient sharing of RTP scores among different music publishers, reducing redundancy and improving the overall efficiency of the music streaming ecosystem.

How does this patent fit in bigger picture?

Technical landscape at the time

Music streaming services were already widespread in the mid-2010s, when the earliest application in the ’713 family was filed. The ’713 application itself was filed on Jan 5, 2023, as a later member of that family, which is consistent with its Jan 22, 2035 expiry date. Users typically interacted with these services through dedicated applications or web interfaces. Systems commonly relied on large catalogs of licensed music tracks, and hardware or software constraints made it non-trivial to generate personalized playlists from detailed audio analysis.

Novelty and Inventive Step

The examiner allowed the application because the claims overcome prior art rejections. While prior art teaches selecting songs based on musical characteristics and filtering similar songs based on criteria like mood and frequency, it does not explicitly teach that similarities in digitized representations of songs are based on a human-trained machine listening to each song to isolate and identify frequency characteristics. The claims explicitly recite this feature.

Claims

This patent contains 20 claims, with independent claims 1 and 16. The independent claims are directed to a method and a system for selecting a song based on computer-derived comparisons of song representations and known similarities, considering frequency characteristics and moods. The dependent claims generally elaborate on the method and system, providing details about representations, audio fingerprints, frequency data analysis, mood correlation, and song attributes.
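
The dependent claims mention frequency data analysis and audio fingerprints. As a rough illustration only, the sketch below reduces raw samples to a normalized energy-per-frequency-band vector using a naive discrete Fourier transform. The band layout and the normalization are assumptions. This plain signal-processing step is a stand-in, not the human-trained machine that the claims recite.

```python
import math
from typing import List, Sequence


def band_energies(samples: Sequence[float], num_bands: int = 4) -> List[float]:
    """Split the lower half of a naive DFT spectrum into equal-width bands.

    Returns each band's share of total spectral power (all zeros for silence).
    O(n^2), so only suitable for short illustrative signals.
    """
    n = len(samples)
    half = n // 2
    if half == 0 or num_bands < 1:
        raise ValueError("need at least 2 samples and 1 band")
    energies = [0.0] * num_bands
    for k in range(half):
        # Real and imaginary parts of DFT bin k.
        re = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(samples))
        im = -sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(samples))
        band = k * num_bands // half  # equal-width band containing bin k
        energies[band] += re * re + im * im
    total = sum(energies)
    return [e / total for e in energies] if total else energies
```

A pure tone at DFT bin 20 of a 64-sample frame puts almost all of its energy in band 2 of 4. The index of the dominant band, or the whole vector, could then serve as a crude fingerprint for comparing songs.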

Key Claim Terms

Definitions of key terms used in the patent claims.

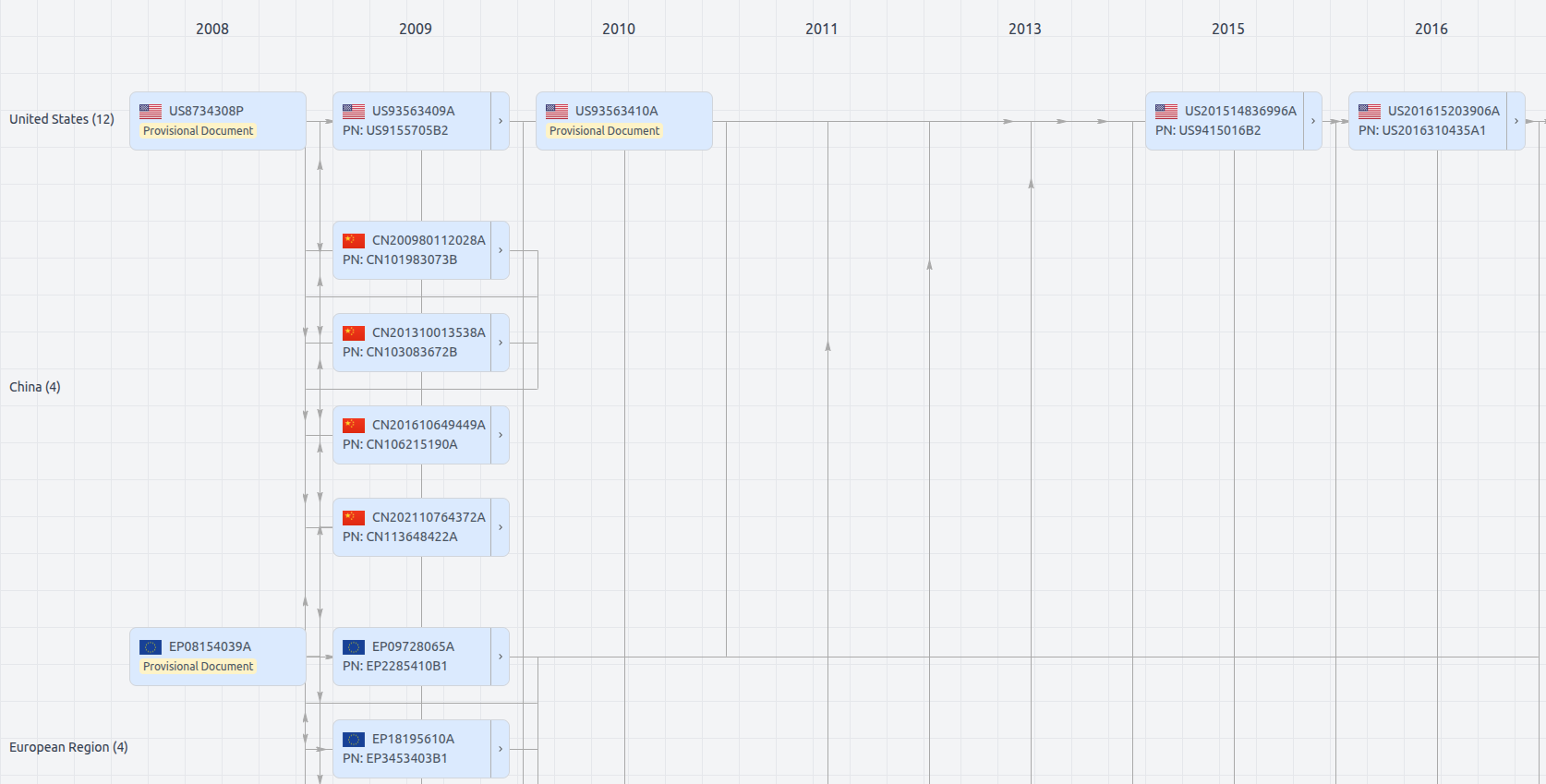

Patent Family

File Wrapper

The dossier documents provide a comprehensive record of the patent's prosecution history - including filings, correspondence, and decisions made by patent offices - and are crucial for understanding the patent's legal journey and any challenges it may have faced during examination.

US11899713

- Application Number: US18150728
- Filing Date: Jan 5, 2023
- Status: Granted
- Expiry Date: Jan 22, 2035
- External Links: Slate, USPTO, Google Patents